How do we get AI to provide reliable answers? Give it reliable information

Retrieval Augmented Generation allows for the creation of highly focused chatbots that avoid many of ChatGPT's pitfalls.

In the year since the launch of ChatGPT, there has been no shortage of opinions on AI. To some, this marks the beginning of the reign of our robot overlords. To others, AI promises to reshape the way people live and work.

I generally walk the thin line between skeptic and cynic, but I’m an AI optimist.

I have used GPT and other AI tools regularly for the past year, and they have consistently saved me time and cognitive energy. Need a carefully worded response to a passive-aggressive e-mail, but worry that your rage might pour out uncontrollably if you write it yourself? Want a quick summary of a research paper? Want an outline to build a presentation from? AI does all of that.

Unfortunately, my optimism about AI also comes from a place of desperation. Medicine is in dire need of tools that help us complete the ever-increasing administrative tasks that accompany patient care. My hope is that AI can be one of these tools.

Excitingly, large language models (LLMs) like ChatGPT have shown potential in this area. In one study, GPT-4 scored above the passing threshold on United States Medical Licensing Examination (USMLE) questions. In another, its answers to medical questions posted online were rated higher than doctors' answers.

But LLMs have their limitations. Their knowledge can be out of date (although this is improving) and is difficult to verify. They also make things up from time to time. These shortfalls have made widespread implementation of AI in health care an understandably contentious topic.

The Limitations of LLMs

ChatGPT learned from a huge mix of information, including both scientific studies and everyday sources like news sites, blogs, and forums. When you ask a question, it generates an answer from what it absorbed from this enormous pool. This works for basic questions, but for detailed medical questions it falls short: you can't easily tell which sources shaped the answer or whether those sources were trustworthy.

If you want a large language model to use specific sources for your question, you need to provide those sources. To do this, ChatGPT Plus subscribers can upload PDFs or text documents along with their question. Alternatively, you can copy and paste the text directly into your prompt. But there are limits on how much you can upload and how long your prompt can be.

The quality of an LLM's response also depends on how you phrase your question, a practice called “prompt engineering.” For example, a well-engineered prompt might start with a sentence like ‘You are an expert research assistant, and your job is to help me research and revise an article on Throckmorton’s Sign...’

If, to get a reliable answer, you have to spend twenty minutes crafting a prompt and pre-researching your question, ChatGPT won't save you much time or effort.

By combining LLMs with other AI tools, however, this process can be automated. A method called Retrieval Augmented Generation (RAG) eliminates the need to provide contextual information and a detailed prompt, while still producing answers from sources you trust.

RAG and Semantic Search

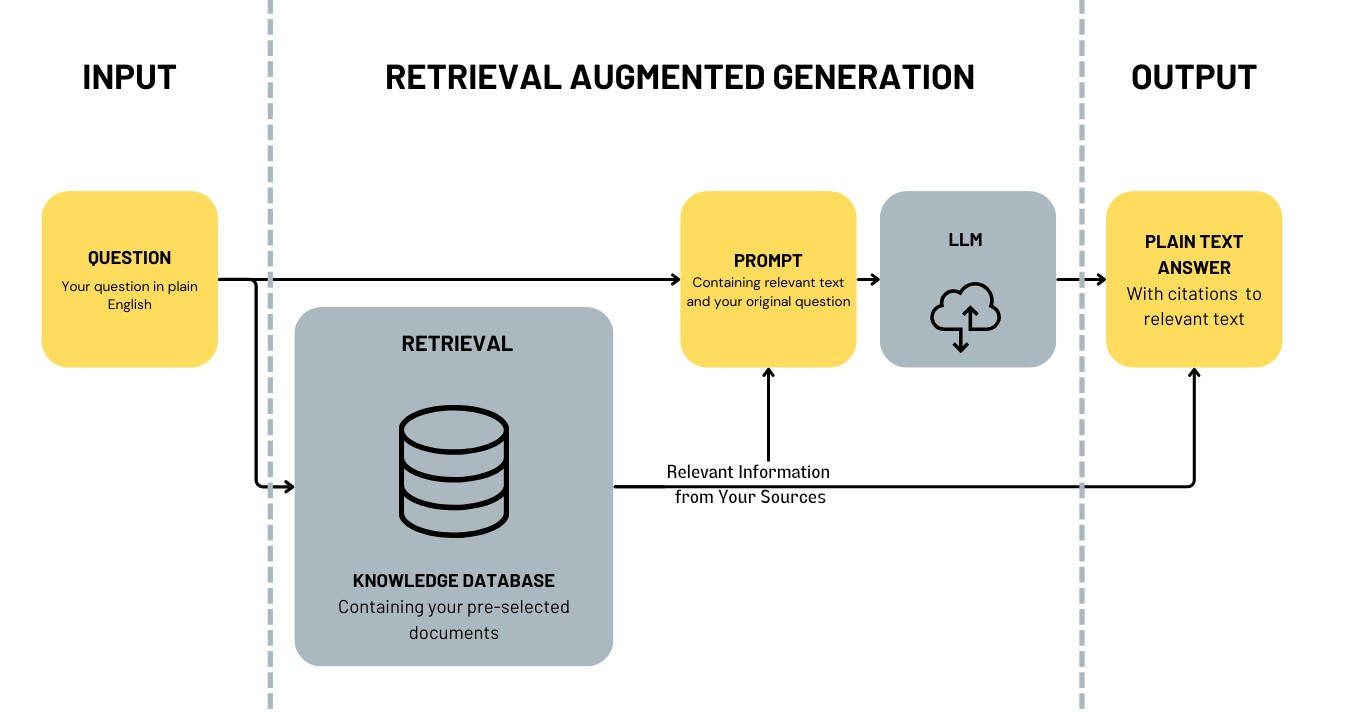

RAG is a major enhancement to current large language models. Its key steps are spelled out in its name:

- Retrieval: Relevant information is found in pre-selected documents to inform the response.

- Augmentation: Your question is ‘augmented’ with this information.

- Generation: Your question, along with the contextual information, is sent to an LLM for ‘generation’ of an answer.
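The three steps can be sketched in a few lines of Python. This is a minimal, self-contained sketch under stated assumptions: the passages and file names are made-up placeholders (not clinical guidance), a crude word-overlap score stands in for real semantic search, and `generate` is a hypothetical placeholder for the point where a real system would call an LLM.

```python
import re

# Hypothetical mini knowledge base: placeholder passages (not clinical guidance),
# each tagged with the made-up document it came from.
DOCUMENTS = [
    {"source": "doc_a.pdf", "text": "Dyspnea in advanced heart failure can be treated with opioids."},
    {"source": "doc_b.pdf", "text": "Hospice eligibility criteria differ for cancer and non-cancer diagnoses."},
    {"source": "doc_c.pdf", "text": "Deactivating an implanted defibrillator should be discussed with heart failure patients."},
]

def tokenize(text):
    """Lowercase the text and return the set of words it contains."""
    return set(re.findall(r"[a-z]+", text.lower()))

def retrieve(question, documents, k=2):
    """Retrieval: rank passages by how many words they share with the question
    (a crude stand-in for semantic search) and keep the top k."""
    q_words = tokenize(question)
    ranked = sorted(documents, key=lambda d: len(q_words & tokenize(d["text"])), reverse=True)
    return ranked[:k]

def augment(question, passages):
    """Augmentation: combine the retrieved passages and the question into one prompt."""
    context = "\n".join(p["text"] for p in passages)
    return f"Context: {context}\n\nQuestion: {question}"

def generate(prompt):
    """Generation: hypothetical placeholder where a real system would call an LLM."""
    return f"[LLM answer based on a {len(prompt)}-character prompt]"

question = "What are some palliative care considerations for heart failure patients?"
passages = retrieve(question, DOCUMENTS)  # doc_c.pdf and doc_a.pdf score highest
prompt = augment(question, passages)
answer = generate(prompt)
```

The hospice passage is left out because it shares only the word "for" with the question; in a real tool, `retrieve` would rank by meaning rather than shared words, and `generate` would send `prompt` to an actual model.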

To find relevant context, many RAG solutions use a method called "semantic search," which finds information based on the meaning of the text rather than on specific keywords. Semantic search typically converts text into lists of numbers, called embeddings, so that passages with similar meanings can be matched even when they use different words. This is helpful in health care applications, where the right keyword isn’t always clear.
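To make the idea concrete, here is a minimal sketch of the ranking step in semantic search, assuming each passage and the question have already been turned into embedding vectors by some embedding model. The three-number vectors below are invented for illustration (real embeddings have hundreds or thousands of dimensions), and cosine similarity is one common way, not the only way, to compare them.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; values near 1.0 mean 'pointing the same way'."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Made-up embeddings for three passage titles (illustrative values only).
passage_vectors = {
    "Managing breathlessness in dying patients": [0.9, 0.1, 0.2],
    "Billing codes for outpatient visits": [0.1, 0.9, 0.1],
    "Opioid dosing for dyspnea": [0.8, 0.2, 0.3],
}

# Hypothetical embedding of the question "Treating shortness of breath during hospice care"
query_vector = [0.85, 0.15, 0.25]

# Rank passages from most to least similar in meaning to the question.
ranked = sorted(
    passage_vectors,
    key=lambda title: cosine_similarity(query_vector, passage_vectors[title]),
    reverse=True,
)
```

None of the titles shares a word with the question, so a keyword search would find nothing, yet the two symptom-management passages rank well above the billing passage because their vectors point in nearly the same direction as the question's.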

Before you search with RAG, you need documents to search through. One advantage of RAG over a plain large language model like ChatGPT is that you choose which documents to use. You can pick a wide range of documents to create a general knowledge base, or a select few for a highly specific task.

When you ask a question, the RAG system searches through the provided documents for relevant information. It then sends that information, along with your question and a predefined prompt, to an LLM to generate an answer.

The overall process looks like this:

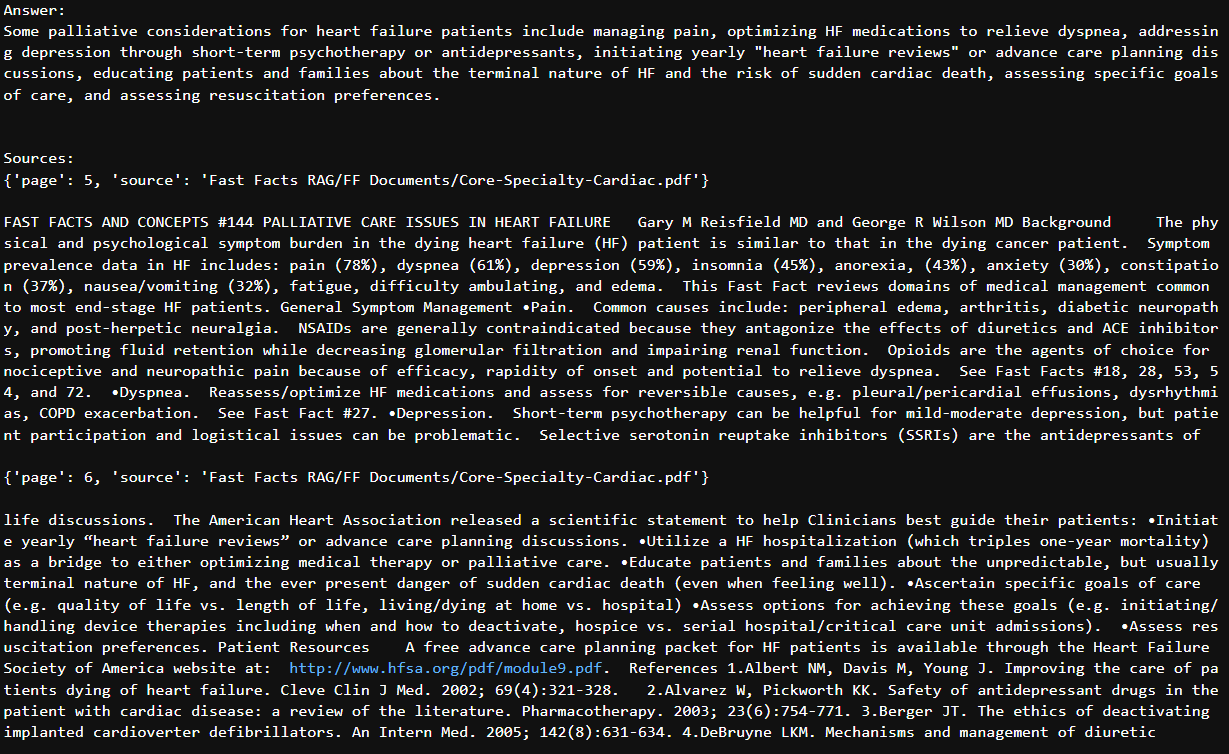

When I ask a RAG system, “What are some palliative care considerations for heart failure patients?” the final prompt sent to the LLM is:

Prompt: Use the following pieces of context to answer the question at the end. If you can’t find an answer in the provided context, do not make an answer up. Be concise and limit your answer to the information included within the provided context.

Context: {the relevant text from your document database}

Question: What are some palliative care considerations for heart failure patients?
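The prompt above is essentially a fixed template with two slots. A minimal sketch of filling it, assuming the retrieved passages arrive as plain strings (the placeholder passages and the `build_prompt` helper are hypothetical):

```python
# The instruction text from the prompt above, stored as a reusable template.
PROMPT_TEMPLATE = (
    "Use the following pieces of context to answer the question at the end. "
    "If you can't find an answer in the provided context, do not make an answer up. "
    "Be concise and limit your answer to the information included within the provided context.\n\n"
    "Context: {context}\n\n"
    "Question: {question}"
)

def build_prompt(question, passages):
    """Fill the template's two slots with the retrieved passages and the user's question."""
    context = "\n\n".join(passages)
    return PROMPT_TEMPLATE.format(context=context, question=question)

prompt = build_prompt(
    "What are some palliative care considerations for heart failure patients?",
    ["<retrieved passage 1>", "<retrieved passage 2>"],
)
```

Because the instructions live in the template, the "do not make an answer up" guardrail is applied to every question automatically, which is why the user no longer has to do the prompt engineering themselves.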

Here’s how a RAG bot I provided with the MyPCNow FastFacts Core Curriculum PDFs responds:

The Benefits of RAG

Because answers generated through RAG come from source documents you provide, each answer can include citations. This is crucial in health care applications, where verifiability is required. It also expands the educational potential of these tools, allowing learners to access information within curated collections of resources and explore primary texts with ease.
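One common way to produce those citations, sketched here as an assumption rather than a description of any particular product, is to keep each passage's source attached to its text, number the passages in the prompt so the LLM can refer to them as [1], [2], and so on, and show the matching reference list beside the answer. The file names, page numbers, and helper functions below are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Chunk:
    """A retrieved passage plus a pointer back to where it came from."""
    text: str
    source: str  # e.g. a file name or URL
    page: int

def format_context_with_ids(chunks):
    """Number each passage so the LLM can cite it as [1], [2], ..."""
    return "\n".join(f"[{i}] {c.text}" for i, c in enumerate(chunks, start=1))

def format_citations(chunks):
    """Build the reference list shown alongside the generated answer."""
    return "\n".join(f"[{i}] {c.source}, p. {c.page}" for i, c in enumerate(chunks, start=1))

chunks = [
    Chunk("Placeholder passage about symptom management.", "handout_a.pdf", 2),
    Chunk("Placeholder passage about goals-of-care conversations.", "handout_b.pdf", 1),
]
print(format_context_with_ids(chunks))
print(format_citations(chunks))
```

The second `print` shows `[1] handout_a.pdf, p. 2` and `[2] handout_b.pdf, p. 1`, so a reader can jump from a bracketed number in the answer straight to the page it came from.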

The flexibility of RAG makes it particularly helpful for medical applications. It can be employed as a broad solution, encompassing a wide array of medical publications in an expansive knowledge base. Alternatively, it can be used for focused purposes, such as chatbots that streamline searches for department-specific resources or help locate information within institutional policies and best practice guidelines.

RAG could even be implemented in patient charts, allowing you to ask a natural language question about a patient’s history and receive a plain-text answer and links to the source of that information.

If you’re interested in trying this out, many companies offer off-the-shelf “chat with your documents” AI bots that use RAG. For the more technologically inclined, there are open-source libraries to create RAG solutions from scratch with relative ease. I’ll be writing about this process in an upcoming post.

Is AI the answer to all of our problems?

Not everyone is excited about AI, and for good reasons. AI isn’t a perfect tool. Because LLMs were trained on text written by people, they can carry the same biases we do. Many writers and creators are angry about LLMs, since their works were fed into these models without permission or compensation. AI is also progressing rapidly, raising existential concerns about what this technology might do if it achieves general intelligence.

Despite these concerns, AI is here, and it is here to stay. We must be diligent with how we implement these tools, but we shouldn’t stifle their potential to improve health care for both patients and caregivers.

With our expanding patient populations and an already overstretched workforce, any innovation that helps providers spend time with their patients warrants our attention.